| Applies to:

PcVue version 8.10 SP2 onwards. |


| Summary:

This article describes important developments in PcVue’s internal mechanisms in version 8.10 SP2. |


| Details:

Logs

Logs for proprietary archive units are now managed in the same way as for HDS archive units.

Operation: A station that is a historical server subscribes to the project’s set of stations. A station is considered a historical server when it produces at least one archive unit. A station that generates log information sends it to the set of subscribed stations. Previously, the station that generated logs always sent them to the stations that had subscribed to the variable, even when a subscribing station was not a historical server.

Result: Log entries for the starts and ends of operator sessions on all stations of the project are sent to the historical servers. A historical server can log the events on a variable without needing to subscribe to it permanently. (It is even possible not to load the variable, but that is inadvisable for the time being: for reasons to do with the project language, some properties are looked up in the database loaded in memory when a log is read.)

The filtering period for trends

Previously, with the HDS, this functionality was managed in the HDS OPC server. That did not guarantee the same VTQ (value, time-stamp, quality) on the redundant servers, so at the time of subscription double the number of points could be stored. From version 8.10 SP2, this functionality is handled at variable subscription. The server of the variable manages the filtering period, so the VTQ transmitted is necessarily the same on all the historical servers. The source is now unique and the historical server station does not do any processing of the data.

Another change has been made to this functionality. Previously, if for instance the value changed only at the start of a 30-minute period, the time-stamp sent to the historical server could be delayed by 30 minutes. This caused fragmentation in the SQL database server and hence degraded performance.
Now, however, the time-stamp sent is the current time at the end of the period, rounded to a multiple of that period, unless the variable is timestamped. For example, if the period is 10 seconds and the variable changes every second, the transmitted time-stamps will be: 08:00:00.000, 08:00:10.000, 08:00:20.000. Previously one could have had 07:59:59.523, 08:09:59.123, 08:19:59.045.

Warning: there is no synchronization on start-up, so in the example above one can also get 08:00:01.000, 08:00:11.000, 08:00:21.000.

Deadbands for trends in HDS

This feature applies only to trends stored via the HDS. It is very useful because it lets you limit data volumes in the SQL database without any overhead for displaying the tables (as would be the case if one increased the deadband for the variable).

Multiple associations

Example of architecture: a central server, SERV_CENTRAL, and three local servers, SERVER1, SERVER2 and SERVER3. Only the central server is assigned to provide redundancy for the three local servers. It would be necessary to configure the following associations:

ASSOC1 = SERV_CENTRAL + SERVER1

Controlling the data flows (Queue regulation) for multi-stations

This concerns client stations. The multi-station manager now provides data flow control on the variables produced by remote servers. If the number of variables or data blocks is too large, the threads of the local client connections are suspended. Previously, queue regulation applied only to the variables manager, but that is known to be insufficient for large multi-station projects. For instance, a 100MB network on which some tens of servers serve a client station could be saturated.
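The idea of suspending connection threads when too much data is pending can be illustrated with a bounded queue. The following is only a minimal sketch of the general technique, not PcVue's implementation: the names `MAX_PENDING`, `connection_thread` and `run_client` are hypothetical, and the threshold value is arbitrary.

```python
import queue
import threading

MAX_PENDING = 5  # hypothetical regulation threshold (pending updates)


def connection_thread(pending, n_updates):
    """Stand-in for one client connection receiving updates from a server."""
    for i in range(n_updates):
        # put() blocks while the queue is full, which suspends this
        # thread until the consumer has caught up.
        pending.put(("VAR", i))
    pending.put(None)  # sentinel: no more updates


def run_client(n_updates=20):
    """Consume updates; return the received values and the peak queue size."""
    pending = queue.Queue(maxsize=MAX_PENDING)
    producer = threading.Thread(target=connection_thread,
                                args=(pending, n_updates))
    producer.start()
    received, peak = [], 0
    while True:
        peak = max(peak, pending.qsize())
        item = pending.get()
        if item is None:
            break
        received.append(item[1])
    producer.join()
    return received, peak


received, peak = run_client()
print(len(received), "updates received, peak queue size", peak)
```

Whatever the relative speeds of the two threads, the number of pending updates never exceeds the threshold and no update is dropped; the producer simply waits.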

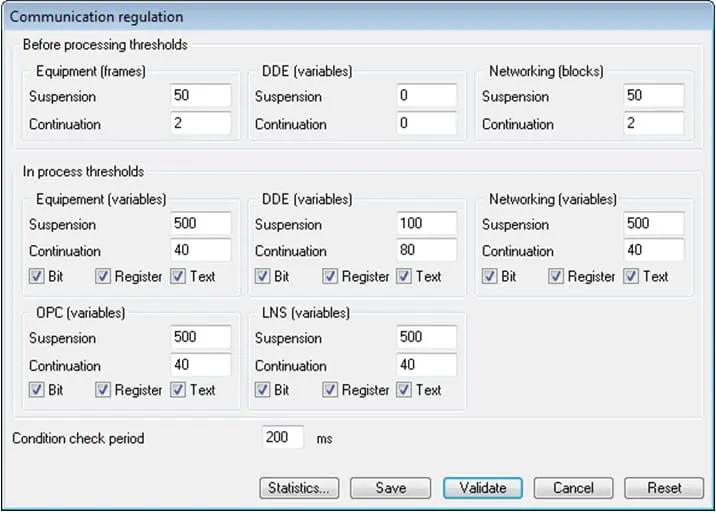

Reminder: you specify the flow control settings in the dialog accessed via the menu Configuration\Communication\Queue Regulation, then its Regulation button. The relevant properties are in the Networking sections.

Controlling the alarm data flow (Alarm queue regulation)

On a client station, the alarm data flow is now regulated. This became necessary because on one particular project PcVue must manage up to 50,000 alarms. When regulation was active, very fast alarm transitions could be lost.

Note: in the regulation process, both the real-time value and the previous value are stored for alarms, so that an alarm that is set and reset quickly can be handled; for Bit, Register or Text variables, only the real-time value is stored.

Optimization of CimWay start-up time

The starting and stopping of devices and frames is now asynchronous across the set of configured networks. Previously, all these commands were synchronized. There are still optimizations to be made when a return delay is configured in the devices.

Results obtained:

1) For a project of 712 devices and 2,046 on the Modbus Master TCP network. No device is connected.

Note: 1,333 threads were created to manage this configuration, one per device and one per unshared TCP/IP connection. There may be further time to be saved in the creation and deletion of all these threads.

2) For a project of 149 devices and 243 on the Modbus Master TCP network. No device is connected.

Note: 224 threads were created to manage this configuration.

Toolkit Manager (previously called the Blank Manager)

The software development environment has been significantly improved. The API library has also been enhanced.
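As a worked illustration of the time-stamp alignment described under "The filtering period for trends", the sketch below reproduces the behaviour of the 10-second example. It rests on two assumptions that are not stated in this article: one value is sent at the end of each period in which a change occurred, and periods are counted from the moment the server starts (`origin`). The function name `aligned_stamps` is hypothetical.

```python
from datetime import datetime, timedelta


def aligned_stamps(changes, period, origin):
    """Return one time-stamp per period that saw at least one change.

    Each stamp is the end of the period containing the change, so every
    stamp equals origin + k * period for some whole number k.
    """
    stamps = []
    for t in sorted(changes):
        k = (t - origin) // period        # index of the period containing t
        end = origin + (k + 1) * period   # end of that period
        if not stamps or stamps[-1] != end:
            stamps.append(end)
    return stamps


period = timedelta(seconds=10)
first = datetime(2011, 7, 29, 8, 0, 1, 523000)
# The variable changes every second for 25 seconds.
changes = [first + timedelta(seconds=i) for i in range(25)]

# Server started exactly on a 10-second boundary ...
synced = aligned_stamps(changes, period, datetime(2011, 7, 29, 8, 0, 0))
# ... or one second later (no synchronization on start-up).
offset = aligned_stamps(changes, period, datetime(2011, 7, 29, 8, 0, 1))

for s in synced + offset:
    print(s.time().isoformat(timespec="milliseconds"))
```

In both cases the stamps are exactly one period apart; only the starting offset differs, which matches the warning about the absence of synchronization on start-up.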

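The note on alarm queue regulation (real-time value and previous value stored for alarms, real-time value only for Bit, Register or Text variables) can be pictured with the following sketch. It is an illustration under assumptions, not PcVue's internal data structure: the class `PendingUpdate` and its methods are hypothetical.

```python
class PendingUpdate:
    """Values held back for one variable while regulation is active."""

    def __init__(self, is_alarm):
        self.is_alarm = is_alarm
        self.previous = None
        self.current = None

    def store(self, value):
        # For an alarm, remember the value that is about to be overwritten.
        if self.is_alarm:
            self.previous = self.current
        self.current = value

    def drain(self):
        """Return the values to deliver once regulation lets them through."""
        values = []
        if self.is_alarm and self.previous is not None:
            values.append(self.previous)
        if self.current is not None:
            values.append(self.current)
        self.previous = self.current = None
        return values


# An alarm that is set and reset quickly: both transitions survive.
alarm = PendingUpdate(is_alarm=True)
for state in ("ON", "OFF"):
    alarm.store(state)
alarm_out = alarm.drain()

# A register that changes three times: only the latest value is kept.
register = PendingUpdate(is_alarm=False)
for value in (1, 2, 3):
    register.store(value)
register_out = register.drain()

print(alarm_out, register_out)
```

Keeping one extra value per alarm is enough to preserve a quick set and reset, while ordinary variables only need their latest value.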
|


Created on: 29 Jul 2011 Last update: 04 Sep 2024